Explains what a digital code of conduct is and what it should contain. A digital code of conduct draws on concepts such as netiquette (respectful and appropriate conduct online) and good digital citizenship (safe online behaviour).

1.1 What is a digital code of conduct?

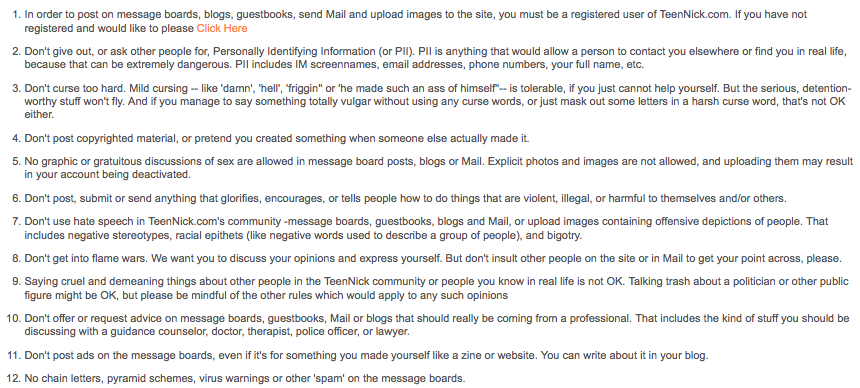

A digital code of conduct is a set of rules governing the use of participatory media. It forms the basis for user and content moderation. It is also a valuable tool for educating your users about computer-mediated communication, and it helps keep your platform environment safe and respectful.

1.2 What should a digital code of conduct include?

Common topics include the sharing of personal information, disrespect toward other users, inappropriate content and disruptive behaviour (spamming [Backgrounder 11], abuse of the reporting mechanism, etc.). The code of conduct can also serve as a useful reminder of copyright principles, to prevent users from inadvertently or deliberately publishing copyrighted works without permission.

Written in accessible language, a digital code of conduct should list, as exhaustively as possible, users’ rights and responsibilities, what is expected of them, and the consequences of breaking the rules, such as the removal of content or the suspension or closure of a user’s account.

1.3 How should the digital code of conduct be displayed?

There is no single recommendation for how or where a digital code of conduct should be displayed. Its importance will vary greatly depending on the type of platform and how participatory it is. For example, on a social network where users have frequent opportunities to interact, the digital code of conduct may take the form of a contract that users must agree to before they can participate.

A digital code of conduct is not required by law. However, because it forms the basis of user and content moderation, we strongly recommend including one. For more information, refer to the regulatory framework that applies to participatory media.

Mobile app stores do not require developers to post a digital code of conduct.

No self-regulation program specifically advocates having a digital code of conduct.

- Some platforms integrate their digital code of conduct into their terms of use. However, for productions aimed at young people, it is best to make it a separate document to show your commitment to your users’ safety.

- Ensure that parents can access your digital code of conduct at all times.

- If your audience consists of preschoolers, your code must address their parents and be posted in the parents’ section.

- If appropriate to your platform, present your digital code of conduct in a playful way to encourage children to put the rules into practice.

- Avoid legal jargon: your code should adopt a friendly tone and use plain language adapted to your audience’s level of maturity. For example, use familiar phrasing such as “When you come here to play, you don’t have the right to . . .”

- State each rule precisely. Where needed, use examples to illustrate more complex ideas. A statement like “Watch your privacy! Don’t share personal information in the chat room” could be accompanied by a list of what counts as personal information.

- Consequences should escalate in severity according to the type and number of offences: for example, a warning, then removal of the content, then a one-day account suspension, and finally closure of the account.

- Make participation contingent on your digital code of conduct: have users check a box to signal their acceptance (“I have read and accept…”) before allowing them to access your platform.
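For teams that build their own moderation tools, the escalating consequences described above can be expressed as a simple ladder. The Python sketch below is one possible illustration, not a prescribed method. It assumes your platform already tracks how many prior offences each user has. The offence types and the number of steps each one skips (`SEVERITY_BOOST`) are hypothetical placeholders to be replaced with the rules in your own code of conduct.

```python
from enum import Enum


class Sanction(Enum):
    WARNING = "warning"
    REMOVE_CONTENT = "remove content"
    SUSPEND_ONE_DAY = "suspend account for one day"
    CLOSE_ACCOUNT = "close account"


# Escalation ladder, mildest consequence first (order taken from the guidance).
LADDER = [
    Sanction.WARNING,
    Sanction.REMOVE_CONTENT,
    Sanction.SUSPEND_ONE_DAY,
    Sanction.CLOSE_ACCOUNT,
]

# Hypothetical offence types: how many rungs a more serious offence skips.
SEVERITY_BOOST = {"spam": 0, "disrespect": 0, "personal_info": 1, "harassment": 2}


def next_sanction(prior_offences: int, offence_type: str) -> Sanction:
    """Choose a sanction from the number of prior offences and the offence type."""
    if prior_offences < 0:
        raise ValueError("prior_offences cannot be negative")
    boost = SEVERITY_BOOST.get(offence_type, 0)  # unknown types get no boost
    step = min(prior_offences + boost, len(LADDER) - 1)  # cap at the last rung
    return LADDER[step]


print(next_sanction(0, "spam").value)        # warning
print(next_sanction(2, "spam").value)        # suspend account for one day
print(next_sanction(1, "harassment").value)  # close account
```

Counting prior offences moves repeat offenders up the ladder, while the severity table lets a serious offence skip the milder steps. Whatever values you choose, they should match the consequences your published code of conduct announces to users.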